Voice Video Manipulator

Project Overview

VVM (Voice Video Manipulator) was my first ROS2 project. It combines voice commands, computer vision, and robotic manipulation to show how a robot arm can understand spoken instructions and act on them.

How It Works

The system works in three main steps:

- Listen: Voice recognition converts speech to commands

- See: Computer vision detects and identifies objects

- Act: Robotic arm performs pick-and-place operations

Say "pick up the red ball" and the robot will find the red ball, calculate its position, and grab it!
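
To make the "Listen" step concrete, here is a minimal sketch of how a transcribed sentence could be turned into a structured command. It assumes the speech recognizer has already produced plain text. The vocabularies (`ACTIONS`, `COLORS`, `OBJECTS`) and the `Command` type are hypothetical names chosen for illustration, not the project's actual code.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical vocabularies: the words the real system supports are not listed here.
ACTIONS = {"pick up": "pick", "grab": "pick", "put down": "place", "place": "place"}
COLORS = {"red", "green", "blue", "yellow"}
OBJECTS = {"ball", "cube", "bottle", "cup"}


@dataclass
class Command:
    action: str
    color: Optional[str]
    target: str


def parse_command(text: str) -> Optional[Command]:
    """Turn a transcribed sentence into a Command, or None if it is not understood."""
    # Lowercase and drop punctuation so "Pick up the red ball!" still matches.
    cleaned = re.sub(r"[^a-z\s]", "", text.lower())
    tokens = cleaned.split()

    # Action phrases may span two words, so search the whole sentence.
    action = next(
        (name for phrase, name in ACTIONS.items()
         if re.search(rf"\b{phrase}\b", cleaned)),
        None,
    )
    color = next((t for t in tokens if t in COLORS), None)
    target = next((t for t in tokens if t in OBJECTS), None)

    # An action and an object are required; the color is optional.
    if action is None or target is None:
        return None
    return Command(action, color, target)


print(parse_command("Pick up the red ball"))
# Command(action='pick', color='red', target='ball')
```

The resulting `color` and `target` fields are what the vision step would then match against the detected objects.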

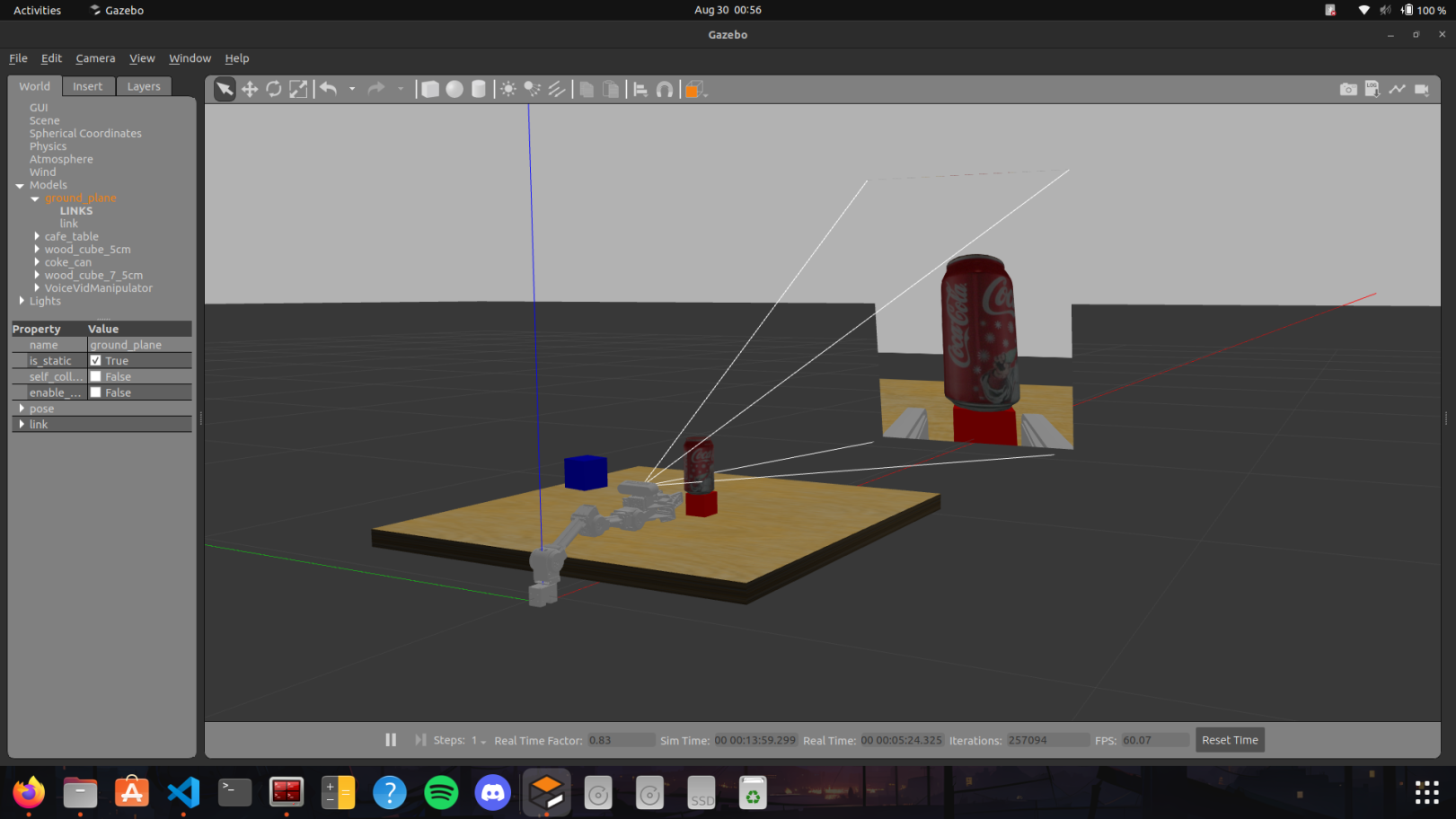

System in Action

Voice Video Manipulator system architecture

Object Detection System

Object detection and distance calculation in real time

Computer Vision Pipeline

The vision system processes camera feeds to identify and locate objects:

- Real-time object detection using machine learning models

- 3D position estimation from 2D camera coordinates

- Distance calculation for precise manipulation

- Object classification for command matching
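
The 3D position estimate can be illustrated with the standard pinhole camera model. This is a sketch under assumptions: the page does not say how depth is obtained, so the code assumes a depth value in meters is available for the detected pixel (for example from a depth camera) and that the intrinsics `fx`, `fy`, `cx`, `cy` are known. The function names are illustrative.

```python
import math
from typing import Tuple


def pixel_to_camera_frame(
    u: float, v: float, depth_m: float,
    fx: float, fy: float, cx: float, cy: float,
) -> Tuple[float, float, float]:
    """Back-project pixel (u, v) with known depth into camera-frame coordinates.

    Pinhole model: x = (u - cx) * z / fx, y = (v - cy) * z / fy, z = depth.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def distance_to(point: Tuple[float, float, float]) -> float:
    """Straight-line distance from the camera origin to the point, in meters."""
    x, y, z = point
    return math.sqrt(x * x + y * y + z * z)


# Assumed intrinsics for a 640x480 image; real values come from camera calibration.
FX, FY, CX, CY = 500.0, 500.0, 320.0, 240.0

# Center of a detected bounding box, 100 px right of the image center, 1 m away.
point = pixel_to_camera_frame(420, 240, 1.0, FX, FY, CX, CY)
print(point, distance_to(point))
```

The result is expressed in the camera's frame; it still has to be transformed into the robot's frame before the arm can use it.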

Complete Voice Control Demo

Complete voice-controlled pick-and-place operation

Technical Implementation

- Voice recognition for natural language command processing

- Computer vision for object detection and identification

- 3D distance calculation for precise positioning

- Inverse kinematics for robotic arm control

- ROS2 integration for modular system architecture

- Gazebo simulation for safe testing and development
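
As an illustration of the inverse kinematics item, here is the textbook closed-form solution for a two-link planar arm. The arm used in VVM is not described on this page and a real manipulator would normally rely on a full solver, so treat this as a simplified sketch. The link lengths and function names are assumptions.

```python
import math
from typing import Tuple


def two_link_ik(
    x: float, y: float, l1: float, l2: float, flip_elbow: bool = False
) -> Tuple[float, float]:
    """Return joint angles (q1, q2) in radians that place the tip at (x, y).

    Raises ValueError if the target is outside the arm's reach.
    """
    # Law of cosines gives the elbow angle.
    cos_q2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_q2) > 1.0 + 1e-9:
        raise ValueError("target is out of reach")
    cos_q2 = max(-1.0, min(1.0, cos_q2))  # absorb rounding at the workspace edge

    q2 = math.acos(cos_q2)
    if flip_elbow:
        q2 = -q2  # the mirrored elbow configuration reaches the same point

    # Shoulder angle: direction to the target minus the offset caused by link 2.
    q1 = math.atan2(y, x) - math.atan2(l2 * math.sin(q2), l1 + l2 * math.cos(q2))
    return (q1, q2)


def forward_kinematics(q1: float, q2: float, l1: float, l2: float) -> Tuple[float, float]:
    """Tip position for given joint angles; used here to check the IK result."""
    x = l1 * math.cos(q1) + l2 * math.cos(q1 + q2)
    y = l1 * math.sin(q1) + l2 * math.sin(q1 + q2)
    return (x, y)


# Assumed link lengths of 0.25 m each and a target 0.3 m forward, 0.2 m up.
q1, q2 = two_link_ik(0.3, 0.2, 0.25, 0.25)
print(forward_kinematics(q1, q2, 0.25, 0.25))  # approximately (0.3, 0.2)
```

Feeding the solved angles back through the forward kinematics is a quick sanity check before sending any joint command to hardware or to the simulator.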


Key Features

- Natural language voice commands

- Real-time object detection and classification

- Automatic distance calculation

- Precise robotic arm manipulation

- Gazebo simulation environment

- Modular ROS2 architecture

Challenges Solved

- Voice Recognition Accuracy: Implemented robust speech processing to handle various accents and background noise

- Object Detection Precision: Fine-tuned machine learning models for accurate object identification

- 3D Position Calculation: Developed algorithms to convert 2D camera coordinates into 3D positions in the robot's workspace

- System Integration: Integrated multiple AI components into a single ROS2 system
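
One piece of the 3D position problem is moving a point from the camera's coordinate frame into the robot's base frame. The sketch below does this with a 4x4 homogeneous transform in plain Python. The camera mounting (position and orientation) is an assumed example, not the actual VVM setup; in a ROS2 system this bookkeeping is commonly delegated to tf2.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def make_transform(rotation: Sequence[Sequence[float]],
                   translation: Sequence[float]) -> Matrix:
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    return [
        [*rotation[0], translation[0]],
        [*rotation[1], translation[1]],
        [*rotation[2], translation[2]],
        [0.0, 0.0, 0.0, 1.0],
    ]


def transform_point(T: Matrix, point: Sequence[float]) -> Tuple[float, float, float]:
    """Apply the transform T to a 3D point and return the transformed point."""
    p = (point[0], point[1], point[2], 1.0)
    x, y, z = (sum(T[r][c] * p[c] for c in range(4)) for r in range(3))
    return (x, y, z)


# ASSUMED mounting: camera 0.8 m above the base and 0.4 m forward, looking
# straight down. Its z axis points along the robot's -z, its y along -y.
R_BASE_CAMERA = [
    [1.0, 0.0, 0.0],
    [0.0, -1.0, 0.0],
    [0.0, 0.0, -1.0],
]
T_BASE_CAMERA = make_transform(R_BASE_CAMERA, [0.4, 0.0, 0.8])

# An object seen 0.2 m to the camera's right and 0.7 m in front of the lens.
object_in_camera = (0.2, 0.0, 0.7)
print(transform_point(T_BASE_CAMERA, object_in_camera))
```

With this mounting, the object ends up roughly 0.6 m in front of the base and 0.1 m above it, which is the kind of target an inverse kinematics solver expects.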

My First ROS2 Experience

This project was my introduction to ROS2 development. It taught me how to:

- Design modular robotic systems

- Integrate multiple AI technologies

- Work with simulation environments

- Handle real-time data processing

- Debug complex multi-component systems

The experience gained from VVM became the foundation for all my future robotics projects.